    cd /usr/local/
    # Upload the Spark tarball to this directory, then unpack it
    tar xf spark-2.0.2-bin-hadoop2.7.tgz
    mv spark-2.0.2-bin-hadoop2.7 spark

    vim /etc/profile
    # Add this line to /etc/profile:
    export SPARK_HOME=/usr/local/spark
    # and append this to the existing PATH entry:
    :$SPARK_HOME/bin
    source /etc/profile

    cd spark/conf/
    mv spark-env.sh.template spark-env.sh
    mv slaves.template slaves
    mv spark-defaults.conf.template spark-defaults.conf

    # Edit the configuration file
    vim spark-env.sh
    # Contents of spark-env.sh:
    export SCALA_HOME=/usr/scala
    export SPARK_HOME=/usr/local/spark
    export HADOOP_HOME=/usr/local/hadoop
    export JAVA_HOME=/usr/java
    export SPARK_WORKER_MEMORY=1g
    export SPARK_MASTER_IP=hadoop
    export SPARK_WORKER_CORES=2
    ################################

    cd ../sbin/
    # Write the start script
    vim start-spark.sh
    # Contents of start-spark.sh:
    #!/bin/bash
    /usr/local/spark/sbin/start-all.sh
    # Save and exit
    chmod 755 start-spark.sh

    vim /etc/profile
    # Append this to the existing PATH entry:
    :$SPARK_HOME/sbin
    source /etc/profile

    # Write the stop script, starting from a copy of the start script
    cp start-spark.sh stop-spark.sh
    vim stop-spark.sh
    # Contents of stop-spark.sh:
    #!/bin/bash
    /usr/local/spark/sbin/stop-all.sh
    chmod 755 stop-spark.sh

    # Start Spark
    start-all.sh
    start-spark.sh
    spark-shell
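
The `spark-env.sh` edit above is done by hand in vim. If you would rather script it, here is a minimal sketch that writes the same settings non-interactively. The function name `write_spark_env` and its directory argument are hypothetical, not part of Spark; the values are copied from the steps above and assume the same install paths.

```shell
#!/bin/bash
# Hypothetical helper (not part of Spark): write the spark-env.sh settings
# from this walkthrough into the given conf directory.
write_spark_env() {
    local conf_dir="$1"

    # Refuse to run if the target directory does not exist.
    if [ ! -d "$conf_dir" ]; then
        echo "conf directory not found: $conf_dir" >&2
        return 1
    fi

    # Quoted heredoc delimiter: the lines are written literally, nothing is expanded.
    cat > "$conf_dir/spark-env.sh" <<'EOF'
export SCALA_HOME=/usr/scala
export SPARK_HOME=/usr/local/spark
export HADOOP_HOME=/usr/local/hadoop
export JAVA_HOME=/usr/java
export SPARK_WORKER_MEMORY=1g
export SPARK_MASTER_IP=hadoop
export SPARK_WORKER_CORES=2
EOF
}

# Example (assumes the install path used above):
#   write_spark_env /usr/local/spark/conf
```

Note that this overwrites any existing `spark-env.sh` in the target directory, so back up the file first if you have already customized it.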

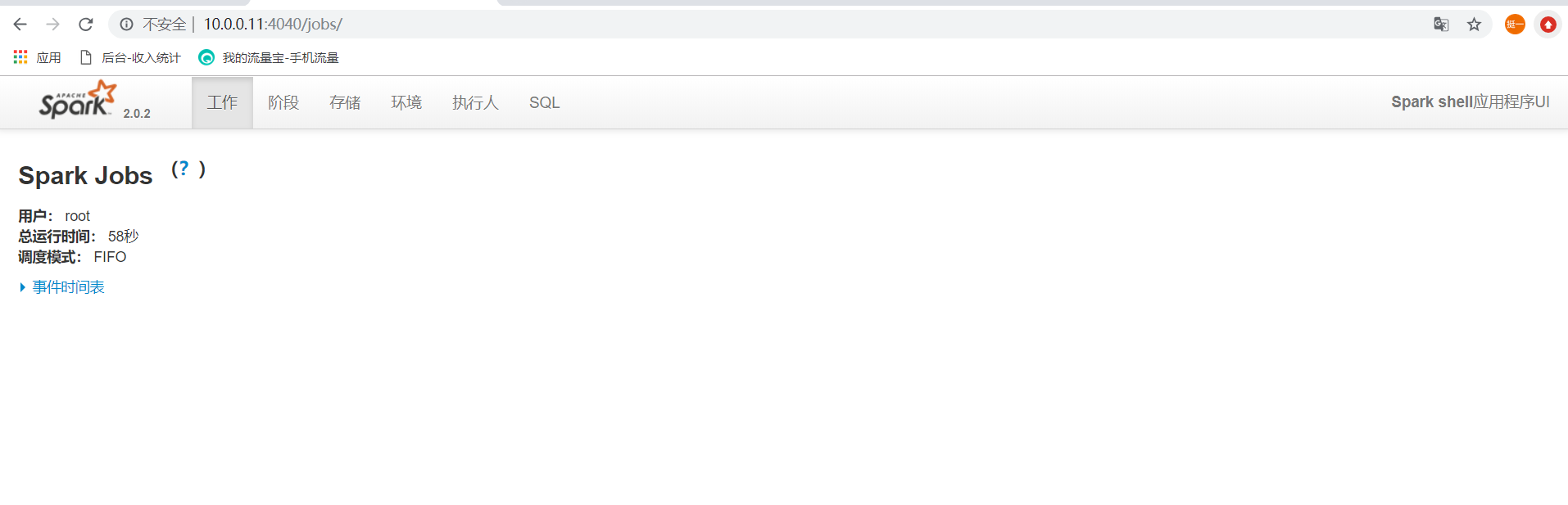

Open http://10.0.0.11:8080 in a browser to reach the Spark master web UI.
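
On a machine without a browser, the same check can be run from the command line. This is a rough sketch that assumes `curl` is installed; the function name `master_ui_up` is hypothetical, and the 5-second timeout is an arbitrary choice.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the given URL answers with a non-error
# HTTP status within 5 seconds, fail otherwise.
master_ui_up() {
    local url="$1"
    # -s: silent, -f: treat HTTP errors as failure, -o /dev/null: discard the body
    curl -sf --max-time 5 -o /dev/null "$url"
}

# Example (address taken from the step above):
#   master_ui_up http://10.0.0.11:8080 && echo "master UI reachable"
```

If the check fails right after `start-spark.sh`, it may just mean the master has not finished starting yet, so retry after a few seconds before digging into the logs.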